The End of IFTTT? How Local-First AI Agents are Building the Truly Smart Home

January 13, 2026

Introduction: The Fragility of the Cloud-Connected Home

For years, the smart home has been powered by a simple mantra: "If This, Then That." Platforms like IFTTT, Zapier, and the native apps from Google, Amazon, and Apple have allowed us to stitch together our devices into useful routines. When your motion sensor detects movement, it tells a cloud server, which then tells your smart light to turn on. It's a model that has served us well, but it has inherent weaknesses:

- Cloud Dependency: If your internet connection goes down, your smart home becomes dumb. Routines fail, and you're back to flipping switches.

- Latency: The round-trip from sensor to cloud and back to device can introduce noticeable delays. It's the difference between a light turning on instantly and a frustrating one-second pause.

- Privacy: Every event, every routine, every interaction with your home is logged and processed on a server owned by a third party. You are trusting them with the intimate details of your daily life.

- Limited Intelligence: Most cloud-based routines are simple triggers. They lack context. They can't understand why you walked into a room, only that you did.

The next evolution of the smart home is the Local-First AI Agent. This architectural model runs the "brain" of your smart home, the AI, directly within your own network. By combining local communication protocols like MQTT with locally run Large Language Models (LLMs), we can build a home that is faster, more private, and genuinely intelligent.

This guide will walk you through the architecture and implementation of a local-first smart home AI agent.

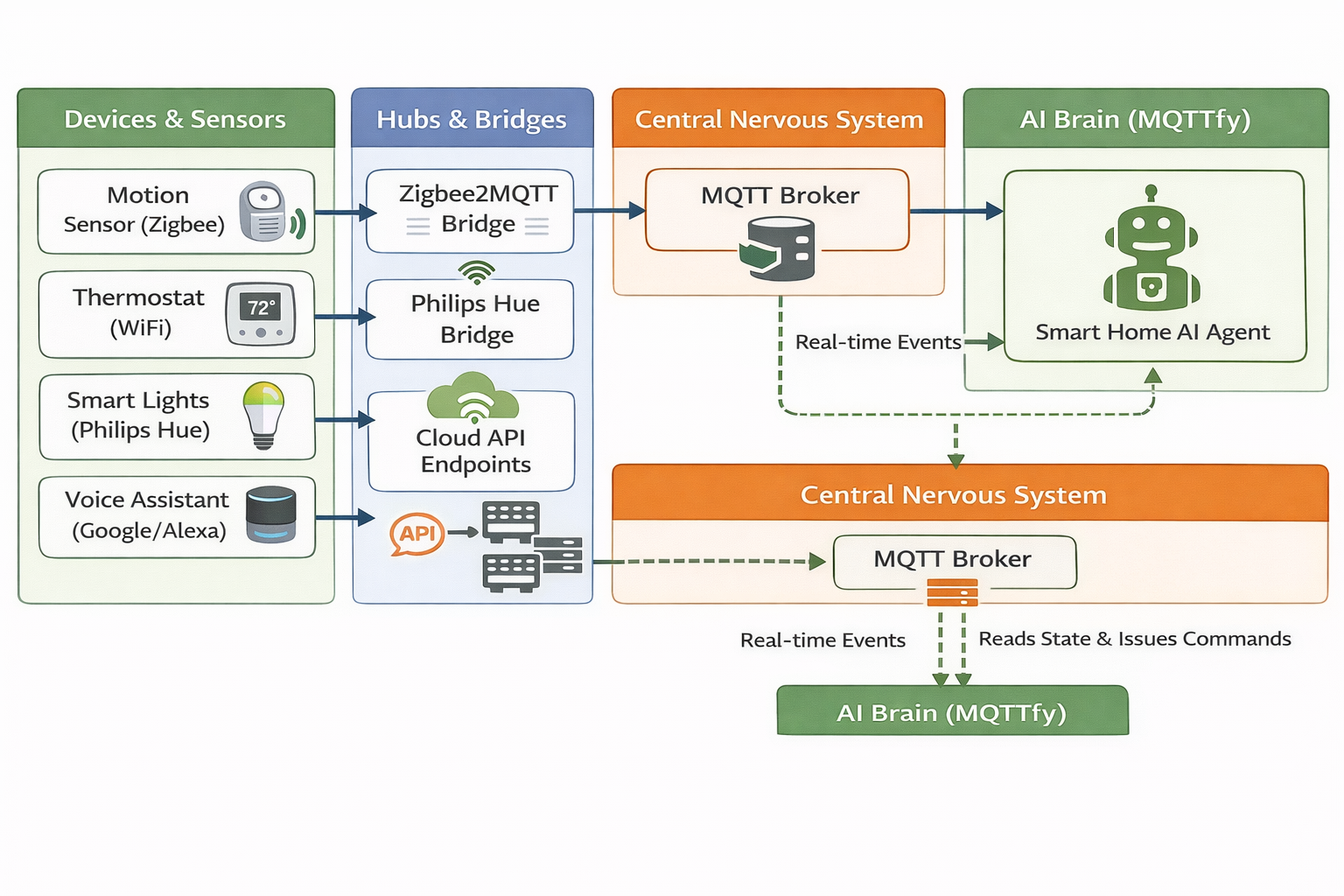

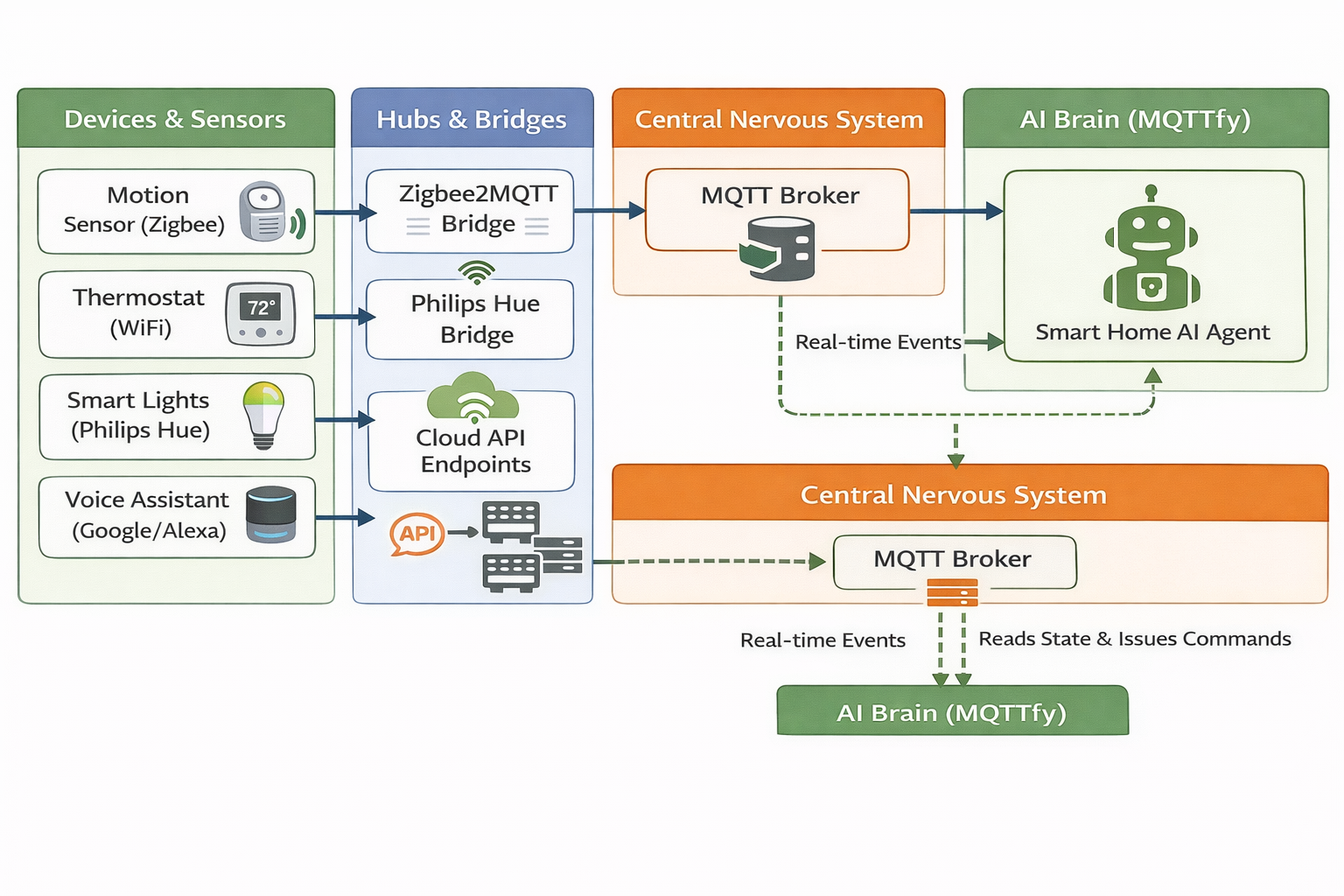

The Local-First Smart Home Architecture

The core idea of this architecture is to minimize reliance on external cloud services for critical home functions. The AI agent acts as a central hub, communicating directly with devices on the local network.

Key Components:

- Local AI Agent Host: A small, always-on computer on your network. A Raspberry Pi 5, an Intel NUC, or an old laptop are all excellent candidates. This machine runs the AI agent software (e.g., MQTTfy).

- MQTT Broker: The local post office for messages. Free, open-source brokers like Mosquitto are easy to set up. All your MQTT-enabled devices will communicate through this broker, never needing to send data over the internet.

- Local LLM: This is the reasoning engine. Tools like Ollama allow you to download and run powerful LLMs (like Llama 3 or Phi-3) directly on your hardware. When the AI agent needs to make a decision, it queries this local model.

- Devices with Local Control: The critical element. This includes devices that use protocols like MQTT, Zigbee (via a coordinator), Z-Wave, or have a local HTTP API. The goal is to avoid devices that only work via their manufacturer's cloud.

- Cloud APIs (The Exception): The agent can still securely access the internet for non-critical, data-enrichment tasks, like fetching the weather forecast or playing a Spotify playlist. The key is that the core logic (`if motion, then light`) remains local.
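As one illustration of the Local LLM component listed above, here is a minimal sketch of how an agent might query a model served by Ollama over its local HTTP API. It assumes Ollama is running on its default port (11434) on the agent host and that a model tagged `llama3` has already been pulled. The function names are hypothetical, and an agent framework such as MQTTfy may wire this up differently.

```python
import json
import urllib.request

# Assumption: Ollama is serving on its default local address.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_generate_request(prompt: str, model: str = "llama3") -> dict:
    """Build the JSON body for Ollama's /api/generate endpoint."""
    return {
        "model": model,   # assumption: this model tag has been pulled locally
        "prompt": prompt,
        "stream": False,  # ask for one complete JSON reply instead of a stream
    }


def ask_local_llm(prompt: str, model: str = "llama3", timeout: float = 60.0) -> str:
    """Send the prompt to the local model and return its text reply."""
    body = json.dumps(build_generate_request(prompt, model)).encode("utf-8")
    request = urllib.request.Request(
        OLLAMA_URL,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    # The request never leaves the local machine, so no internet is required.
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read())["response"]
```

Keeping the payload builder separate from the network call makes the request shape easy to inspect and test without a running model.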

Building an Intelligent Scene Controller AI Agent

Let's design an agent that goes beyond a simple IFTTT routine. Instead of just turning on a light, this agent will set a "Movie Night" scene, but with contextual intelligence.

Agent Goal (System Prompt):

"You are the 'Movie Night' AI agent. Your goal is to create the perfect movie-watching environment. You are triggered when a user says 'start movie night' or when the living room TV is turned on after 8 PM. When triggered, you must perform the following sequence: 1. Dim the main living room lights to 20% brightness. 2. Set the accent lights to a deep blue color. 3. Check the current room temperature. If it is above 70°F, set the thermostat to 68°F. 4. Finally, confirm that all actions were successful and log the completion."
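The two trigger conditions in this prompt are simple enough to check in plain code before the LLM is involved at all. The sketch below is one way to do that. The function and argument names are hypothetical, and it assumes "after 8 PM" means from 20:00 until midnight, since the prompt does not say when the window ends.

```python
from datetime import datetime, time
from typing import Optional

EVENING_START = time(20, 0)  # "after 8 PM" from the system prompt


def should_start_movie_night(
    now: datetime,
    tv_turned_on: bool = False,
    utterance: Optional[str] = None,
) -> bool:
    """Return True when either trigger condition from the prompt is met."""
    # Voice trigger: the user says "start movie night" at any time of day.
    if utterance is not None and "start movie night" in utterance.lower():
        return True
    # Device trigger: the TV was turned on at or after 20:00
    # (assumed to last until midnight).
    return tv_turned_on and now.time() >= EVENING_START
```

For example, `should_start_movie_night(datetime(2026, 1, 13, 20, 30), tv_turned_on=True)` evaluates to `True`, while the same call at 15:00 evaluates to `False`.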

Agent Tools (all configured for local network addresses):

- Main Lights (MQTT Tool): Publishes to `home/lights/living_room/main/command`.

- Accent Lights (MQTT Tool): Publishes to `home/lights/living_room/accent/command`.

- Check Temperature (MQTT Tool): Subscribes to `home/thermostat/status` to read the current temperature.

- Set Thermostat (MQTT Tool): Publishes to `home/thermostat/command`.

- Check TV Status (API Tool): Makes a GET request to the TV's local API at `http://192.168.1.100/api/status`.
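The publishing tools above could be represented in code as a small registry that maps each tool to its topic. This is a minimal sketch, not how any particular agent framework stores its tools: the `MqttTool` class, the `Publisher` type, and the dictionary keys are all hypothetical, and only the topic strings come from the list above.

```python
import json
from dataclasses import dataclass
from typing import Callable, Dict

# A publisher is any callable that sends a payload string to a topic,
# for example a thin wrapper around an MQTT client's publish method.
Publisher = Callable[[str, str], None]


@dataclass(frozen=True)
class MqttTool:
    name: str
    topic: str

    def run(self, publish: Publisher, payload: dict) -> None:
        """Serialize the payload as JSON and publish it to this tool's topic."""
        publish(self.topic, json.dumps(payload))


# Topics come from the tool list; the dictionary keys are hypothetical.
TOOLS: Dict[str, MqttTool] = {
    "main_lights": MqttTool("Main Lights", "home/lights/living_room/main/command"),
    "accent_lights": MqttTool("Accent Lights", "home/lights/living_room/accent/command"),
    "set_thermostat": MqttTool("Set Thermostat", "home/thermostat/command"),
}
```

Because the publisher is injected, a connected paho-mqtt client's `publish` method could be passed in on the real system, while a test can pass a function that simply records what would have been sent.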

The Autonomous Workflow in Action

It's 8:30 PM. Someone turns on the living room TV.

- Trigger: An external script (or another agent) monitoring the TV's status sees the change. It publishes a message to an MQTT topic like `home/events`, e.g., `{"event": "tv_on", "room": "living_room"}`. The Movie Night agent is triggered by this message.

- Reasoning (Goal Analysis): The agent's AI core receives the trigger. It checks its system prompt and the current time. The conditions are met: the TV is on, and it's after 8 PM.

- Local LLM Consultation: The agent might pass its goal, the trigger data, and its available tools to the local LLM. It asks, "Based on my goal and this trigger, what is my sequence of actions?" The LLM, running on your own hardware, responds with the logical steps.

- Tool Execution (Sequence):

  - It selects the Main Lights tool and publishes `{"brightness": 20}` to the corresponding topic.

  - It selects the Accent Lights tool and publishes `{"color": "blue"}`.

  - It accesses the last known message from the `home/thermostat/status` topic. Let's say the payload is `{"temperature": 72}`.

  - The agent's logic (or the LLM) determines that 72 is greater than 70. It selects the Set Thermostat tool and publishes `{"setpoint": 68}`.

- State Confirmation & Conclusion: The agent might listen for `.../status` updates from the lights and thermostat to confirm its commands were received. Once verified, it logs its final status: "Movie Night scene successfully activated. Dimmed main lights, set accents to blue, and adjusted thermostat to 68°F."
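The workflow above can be sketched as a small deterministic function that turns the trigger event and the last thermostat reading into an ordered list of MQTT commands. Treat it as a stand-in for the plan the local LLM would be expected to produce, not as the agent's actual implementation. The function names and the `hour` parameter are hypothetical; the topics, payloads, and thresholds come from the walkthrough.

```python
import json
from typing import List, Tuple

Command = Tuple[str, dict]  # (MQTT topic, JSON-serializable payload)


def plan_movie_night(thermostat_status: str) -> List[Command]:
    """Turn the last thermostat status message into an ordered command list."""
    commands: List[Command] = [
        ("home/lights/living_room/main/command", {"brightness": 20}),
        ("home/lights/living_room/accent/command", {"color": "blue"}),
    ]
    temperature = json.loads(thermostat_status)["temperature"]
    # Only touch the thermostat when the room is warmer than 70°F.
    if temperature > 70:
        commands.append(("home/thermostat/command", {"setpoint": 68}))
    return commands


def handle_event(event_message: str, thermostat_status: str, hour: int) -> List[Command]:
    """Return the scene commands if the event matches the trigger, else nothing."""
    event = json.loads(event_message)
    is_tv_on = event.get("event") == "tv_on" and event.get("room") == "living_room"
    # Assumption: "after 8 PM" means hour 20 or later on a 24-hour clock.
    if not (is_tv_on and hour >= 20):
        return []
    return plan_movie_night(thermostat_status)
```

With the example payloads from the walkthrough (a `tv_on` event at 8:30 PM and a reading of 72), `handle_event` returns three commands, ending with the thermostat setpoint of 68. With a reading of 70 or below, it returns only the two lighting commands.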

Why This is a Paradigm Shift

This local-first model offers transformative benefits:

- Speed: Actions are nearly instantaneous. The message from your sensor travels to the local broker and to the local agent in milliseconds, not across the country and back.

- Privacy: Your personal habits and home status never leave your network. The AI's "brain" is entirely contained within your four walls. The only data sent to the cloud is what you explicitly permit (e.g., asking for a weather forecast).

- Reliability: Your core routines keep working even if your internet service provider has an outage, because the logic that drives them runs entirely on your own network.

- True Intelligence & Flexibility: Because the agent uses a powerful LLM for reasoning, its behavior is not rigidly coded. You can change its goal with a simple sentence. You could add, *"But if it's a Sunday, set the lights to green instead."* The agent can understand and adapt without needing a developer to write new `if-then` statements.

Conclusion: Your Home, Your Intelligence

The smart home revolution promised a home that anticipates our needs and responds intelligently to our presence. While cloud-based services gave us a taste of this, the local-first AI agent model is poised to finally deliver on that promise. By taking ownership of our data and the AI that processes it, we can build smart homes that are not only more powerful and responsive but also fundamentally more private and secure. It's a shift from renting intelligence from the cloud to owning it in your home.