From Data to Decisions: Leveraging Historian AI Agents

January 13, 2026

Introduction: The Untapped Potential of Your Data Historian

For decades, the plant data historian has been the silent, dutiful archivist of industrial operations. It meticulously records every temperature fluctuation, pressure spike, and valve turn, creating a vast and detailed repository of operational DNA. But for many organizations, the historian remains a passive library, primarily used for reactive, post-mortem analysis. Engineers query it to understand why a failure occurred, not when the next one is likely to happen.

The advent of accessible AI and specialized agent platforms like MQTTfy is fundamentally changing this paradigm. By coupling a Historian AI Agent directly to your data historian, you can transform it from a reactive repository into a proactive, intelligent engine for predictive analytics and operational optimization.

This article provides an advanced technical guide for architecting and implementing a Historian AI Agent. We will explore how this agent acts as a bridge between raw historical data and actionable intelligence, enabling predictive maintenance, root cause analysis, and sophisticated anomaly detection that were once the exclusive domain of data science teams.

The Role of the Historian AI Agent

A Historian AI Agent is not a replacement for your data historian; it is its intelligent co-pilot. Its primary purpose is to continuously and autonomously query historical data to identify patterns, predict future states, and provide context to real-time events.

Core Functions:

- Contextual Analysis: When a real-time alarm occurs (e.g., "Pump Over-Temperature"), the Historian Agent's first job is to query the historian for the minutes or hours leading up to that event. Did the temperature rise slowly? Was there a preceding pressure drop? This context is invaluable for root cause analysis.

- Predictive Pattern Matching: The agent can be trained to recognize the "fingerprints" of known failure modes. By constantly scanning recent historical data, it can identify when a pattern leading to a past failure (e.g., a specific sequence of pressure and vibration changes) is beginning to repeat itself.

- Proactive Anomaly Detection: Beyond known patterns, the agent can use statistical models to identify when a piece of equipment is operating outside its normal historical bounds, even if no specific alarm thresholds have been breached.

- Operational Intelligence Reporting: The agent can be scheduled to run daily or weekly queries to generate high-level intelligence reports, such as identifying the top 10 most frequently alarmed assets or calculating long-term efficiency trends.
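
To make the Proactive Anomaly Detection function above more concrete, here is a minimal sketch of one possible statistical check: a z-score against a historical baseline. The function name, the threshold of 3.0, and the sample readings are illustrative assumptions, not part of MQTTfy or any particular historian.

```python
from statistics import mean, stdev

def is_anomalous(history, latest, z_threshold=3.0):
    """Flag `latest` if it lies more than `z_threshold` sample standard
    deviations away from the mean of `history`."""
    if len(history) < 2:
        return False  # not enough data to estimate the spread
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        return latest != mu  # flat baseline: any change is a deviation
    return abs(latest - mu) / sigma > z_threshold

# Hypothetical bearing temperatures (deg C) from a normal operating period.
baseline = [61.0, 60.5, 61.2, 60.8, 61.1, 60.9, 61.3, 60.7]

print(is_anomalous(baseline, 61.0))  # within the usual spread
print(is_anomalous(baseline, 64.0))  # far outside it, even if no alarm limit is hit
```

A check like this can flag a reading that is unusual for the asset even when it is still well below a fixed alarm threshold. A production model would likely also account for operating mode, seasonality, and sensor noise.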

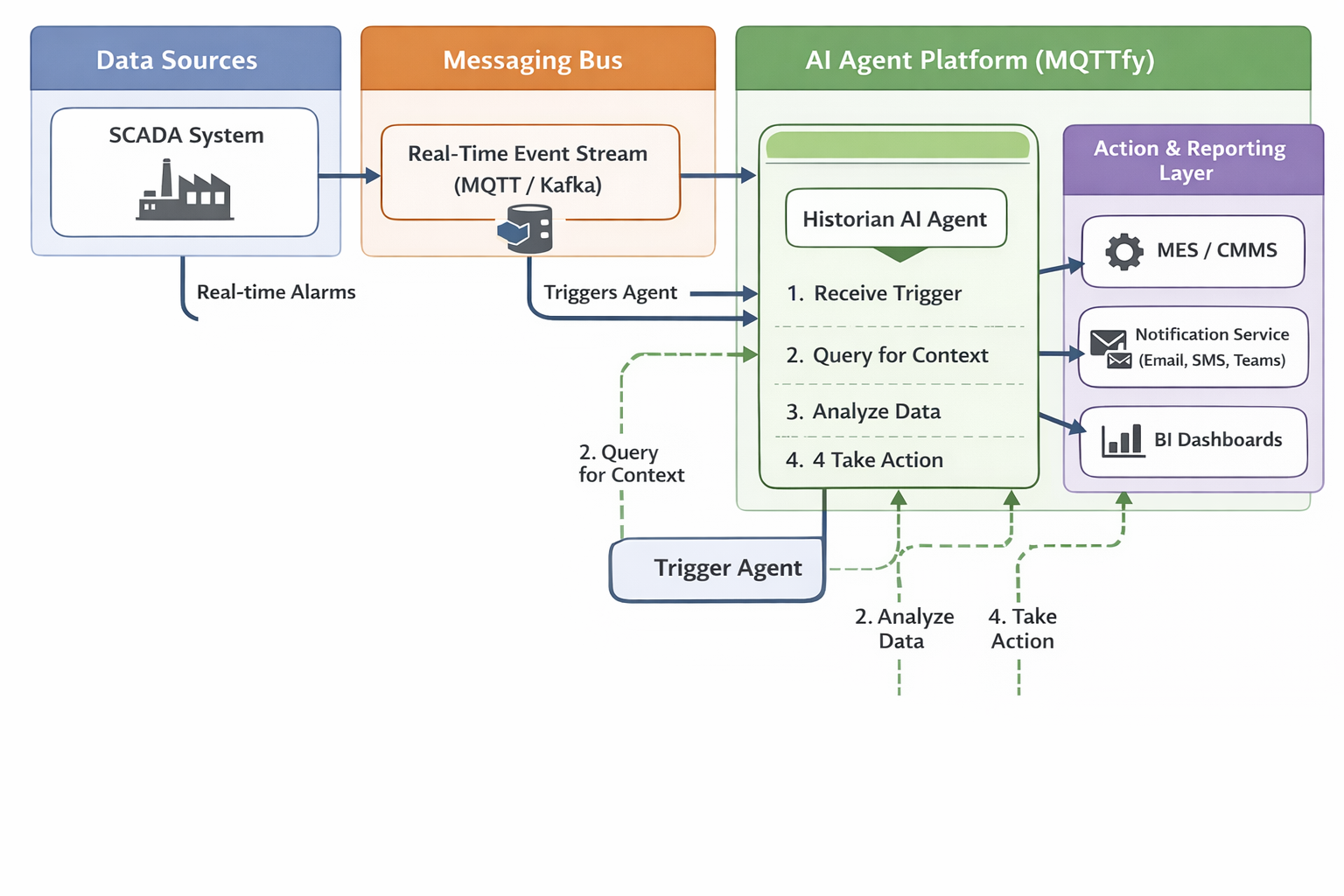

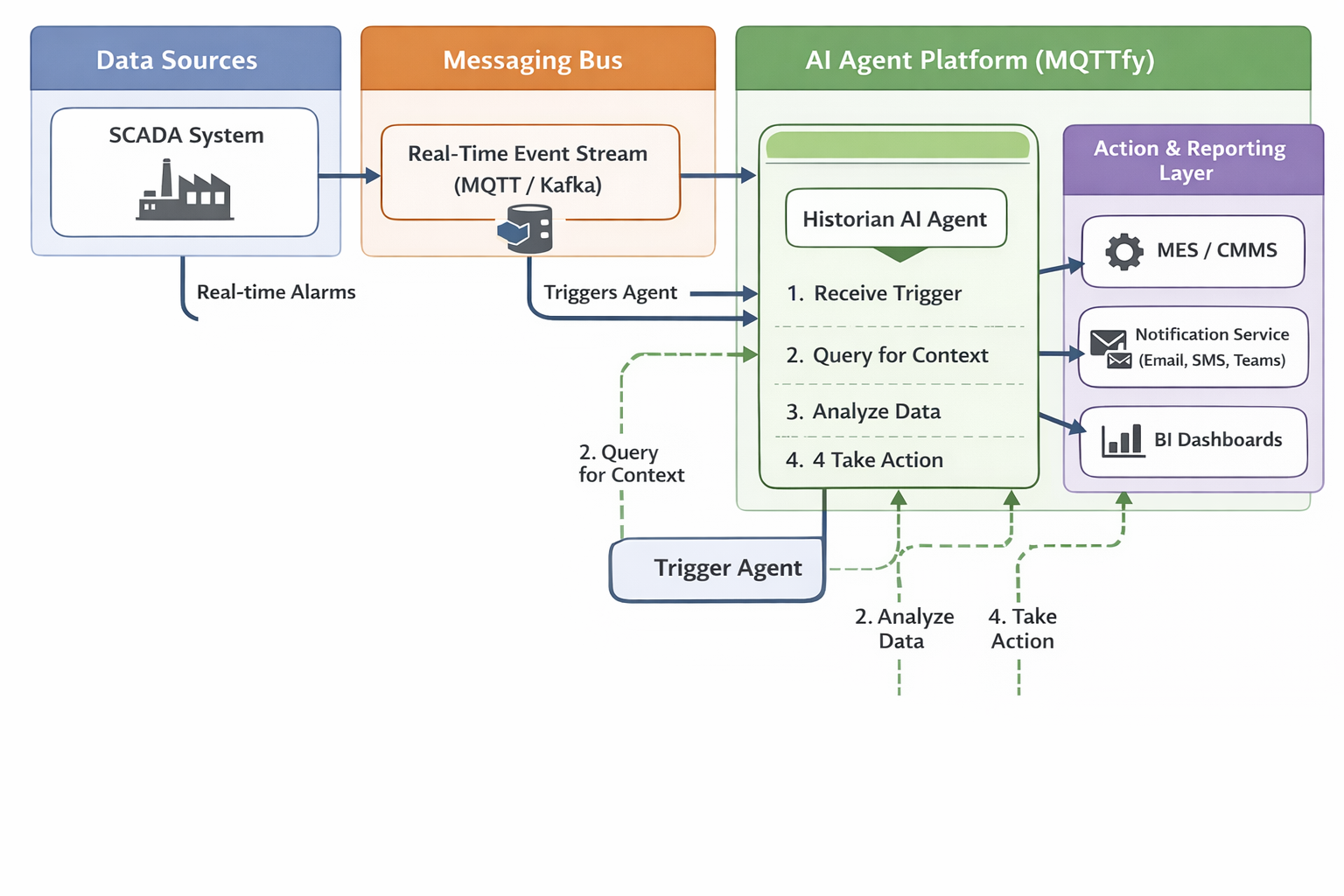

Architectural Integration

The Historian AI Agent sits at a critical intersection between the Operational Technology (OT) and Information Technology (IT) layers. It must communicate with both real-time event streams and the historical data archive.

Workflow Breakdown:

- A real-time event, such as a SCADA alarm, is published to the messaging bus.

- The Historian AI Agent, subscribed to this event stream, is triggered.

- The agent's first action is to use its Historian Connector Tool to execute a query against the Data Historian, requesting data for relevant tags in the timeframe leading up to the alarm.

- The historical data is returned to the agent's AI core.

- The agent's large language model (LLM) analyzes the real-time event in the context of the historical data, guided by its system prompt.

- Based on its analysis, the agent decides which follow-up tool to use: create a work order in the CMMS, send a detailed alert, or update a BI dashboard.
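
The workflow above can be sketched as a small event handler. This is only an outline under stated assumptions: `AgentTools`, `handle_event`, and the stub lambdas are hypothetical names, and a real deployment would replace the stubs with calls to the historian, the LLM, and downstream systems.

```python
from dataclasses import dataclass
from typing import Callable

@dataclass
class AgentTools:
    """Hypothetical bundle of callables standing in for the agent's tools."""
    query_historian: Callable[[dict], list]   # event -> historical rows
    analyze: Callable[[dict, list], str]      # (event, rows) -> finding
    actions: dict                             # action name -> handler(event, finding)

def handle_event(event, tools, choose_action):
    """Run one pass of the workflow for a single real-time event."""
    rows = tools.query_historian(event)       # fetch the pre-alarm context
    finding = tools.analyze(event, rows)      # interpret the event plus its history
    action = choose_action(finding)           # decide which follow-up tool to use
    tools.actions[action](event, finding)     # execute that tool
    return action

# Stub wiring for illustration only.
sent = []
tools = AgentTools(
    query_historian=lambda event: [("Pump-101-Pressure", "2024-06-12T14:20:00Z", 42.0)],
    analyze=lambda event, rows: "Probable Cavitation",
    actions={
        "send_alert": lambda event, finding: sent.append((event["asset_id"], finding)),
        "create_work_order": lambda event, finding: None,
    },
)

event = {"asset_id": "Pump-101", "alarm_type": "High_Vibration_Alarm"}
chosen = handle_event(event, tools, lambda finding: "send_alert")
print(chosen, sent)
```

Keeping each tool behind a plain callable makes it easy to test the flow with stubs before connecting it to live OT systems.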

Implementing the Historian AI Agent in MQTTfy

Let's build a practical example of a Historian AI Agent using the tools available in a platform like MQTTfy.

Agent Goal (System Prompt):

"You are an expert AI Reliability Engineer. When triggered by a 'High_Vibration_Alarm' event from a pump, your primary goal is to perform a root cause analysis and determine the severity. You must use your tools to: 1. Query the data historian for the 'pressure' and 'temperature' tags for the same pump for the 15 minutes prior to the alarm. 2. Analyze the historical data in conjunction with the vibration alarm. 3. If pressure was unstable before the vibration spike, classify the root cause as 'Probable Cavitation'. 4. If temperature was rising steadily, classify the root cause as 'Probable Overheating/Bearing Issue'. 5. If neither pattern is present, classify as 'Unknown Mechanical Vibration'. 6. Send a detailed report with your findings via email."

Required Agent Tools:

- Historian Connector (SQL/API Tool): This tool is configured with the credentials and endpoint for your data historian (e.g., OSIsoft PI via its SQL interface, or a REST API). It must be able to accept a dynamic query.

- Email Tool: A standard tool for sending email notifications.

Workflow Walkthrough

Step 1: Real-time Trigger

An external system publishes a JSON message to the scada/alarms MQTT topic:

    {
      "timestamp": "2024-06-12T14:30:00Z",
      "asset_id": "Pump-101",
      "alarm_type": "High_Vibration_Alarm",
      "value": 1.2,
      "unit": "g"
    }
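
Before any historical query can be issued, the agent needs the asset and the lookback window from this payload. The following sketch shows one way to derive them; `lookback_window` is a hypothetical helper, and the 15-minute default mirrors the system prompt above.

```python
import json
from datetime import datetime, timedelta

def lookback_window(payload, minutes=15):
    """Return (asset_id, window_start, window_end) for an alarm payload."""
    event = json.loads(payload)
    # Swap the trailing "Z" for an explicit UTC offset so that
    # fromisoformat also accepts it on Python versions before 3.11.
    end = datetime.fromisoformat(event["timestamp"].replace("Z", "+00:00"))
    start = end - timedelta(minutes=minutes)
    return event["asset_id"], start, end

payload = (
    '{"timestamp": "2024-06-12T14:30:00Z", "asset_id": "Pump-101", '
    '"alarm_type": "High_Vibration_Alarm", "value": 1.2, "unit": "g"}'
)

asset_id, start, end = lookback_window(payload)
print(asset_id, start.isoformat(), end.isoformat())
# Pump-101 2024-06-12T14:15:00+00:00 2024-06-12T14:30:00+00:00
```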

Step 2: Agent Invocation and Historical Query

The Historian AI Agent, subscribed to this topic, is activated. The JSON payload becomes its initial input. Following its goal, the agent formulates a query for its Historian Connector tool. This could be a SQL query if the historian supports it:

    SELECT tag, time, value
    FROM archive
    WHERE tag IN ('Pump-101-Pressure', 'Pump-101-Temperature')
      AND time BETWEEN '2024-06-12T14:15:00Z' AND '2024-06-12T14:30:00Z'
    ORDER BY time ASC;
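
Because the query is built dynamically from the alarm, it is safer to bind the tag names and timestamps as parameters than to paste them into the SQL string. The sketch below illustrates the idea with an in-memory SQLite database standing in for the historian. The `archive` table layout and the `<asset>-<signal>` tag naming follow the example above; `fetch_context` is a hypothetical helper, and a real historian's SQL dialect and driver may differ.

```python
import sqlite3

def fetch_context(conn, asset_id, start, end, signals=("Pressure", "Temperature")):
    """Fetch rows for the asset's tags between start and end (ISO-8601 strings)."""
    tags = [f"{asset_id}-{signal}" for signal in signals]
    placeholders = ", ".join("?" for _ in tags)
    sql = (
        "SELECT tag, time, value FROM archive "
        f"WHERE tag IN ({placeholders}) AND time BETWEEN ? AND ? "
        "ORDER BY time ASC"
    )
    return conn.execute(sql, (*tags, start, end)).fetchall()

# In-memory stand-in for the historian, with a few hypothetical samples.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE archive (tag TEXT, time TEXT, value REAL)")
conn.executemany(
    "INSERT INTO archive VALUES (?, ?, ?)",
    [
        ("Pump-101-Pressure", "2024-06-12T14:10:00Z", 65.0),    # before the window
        ("Pump-101-Pressure", "2024-06-12T14:20:00Z", 42.0),
        ("Pump-101-Temperature", "2024-06-12T14:20:00Z", 71.5),
        ("Pump-102-Pressure", "2024-06-12T14:20:00Z", 66.0),    # different asset
    ],
)

rows = fetch_context(conn, "Pump-101", "2024-06-12T14:15:00Z", "2024-06-12T14:30:00Z")
print(rows)
```

Comparing timestamps as text works here only because every value uses the same ISO-8601 format; a historian with a native timestamp type would not need that assumption.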

Step 3: AI-Powered Analysis

The Historian returns a dataset, which is passed back to the agent's AI core. The agent now has the full context:

- Trigger: High_Vibration_Alarm at 14:30:00.

- Historical Data: A time-series array showing that from 14:20 to 14:28, the Pump-101-Pressure tag was fluctuating erratically between 40 and 90 PSI, while the Pump-101-Temperature tag remained stable.

The LLM processes this combined information against its system prompt. It recognizes the "unstable pressure before vibration" pattern and concludes the root cause is "Probable Cavitation".
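
In the workflow described here the LLM performs this classification, but the three rules from the system prompt can also be written as a deterministic check, which is useful as a baseline or a sanity test for the agent's output. The sketch below is an illustration only: the thresholds, the use of standard deviation for "unstable", and the use of a least-squares slope for "rising" are all assumptions, as is the sample data.

```python
from statistics import pstdev

def slope(values):
    """Least-squares slope per sample for evenly spaced readings."""
    n = len(values)
    if n < 2:
        return 0.0
    x_mean = (n - 1) / 2
    y_mean = sum(values) / n
    num = sum((i - x_mean) * (v - y_mean) for i, v in enumerate(values))
    den = sum((i - x_mean) ** 2 for i in range(n))
    return num / den

def classify(pressure, temperature, pressure_std_limit=10.0, temp_slope_limit=0.5):
    """Apply the prompt's rules in order; the limits are hypothetical."""
    if pstdev(pressure) > pressure_std_limit:
        return "Probable Cavitation"
    # A positive slope captures "rising" but does not verify steadiness.
    if slope(temperature) > temp_slope_limit:
        return "Probable Overheating/Bearing Issue"
    return "Unknown Mechanical Vibration"

# Hypothetical readings resembling the scenario above.
pressure = [65, 64, 40, 90, 45, 88, 41, 89, 66, 65]
temperature = [71.5, 71.4, 71.6, 71.5, 71.5, 71.6, 71.4, 71.5, 71.5, 71.5]

print(classify(pressure, temperature))  # Probable Cavitation
```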

Step 4: Action and Reporting

The agent now uses its Email Tool. It constructs a detailed, human-readable report based on its findings:

Subject: High-Priority Alert: Probable Cavitation Detected on Pump-101

Body:

- Event: High Vibration Alarm (1.2g) detected on Pump-101 at 14:30:00.

- AI Analysis: Probable Root Cause identified as Cavitation.

- Supporting Evidence: Historical data analysis shows severe pressure instability (fluctuating 40-90 PSI) from 14:20 to 14:28, ending two minutes before the vibration alarm. Temperature remained nominal.

- Recommendation: Immediately inspect pump intake for blockages and check fluid levels.

This detailed, contextual alert is far more valuable than a simple "High Vibration" notification. It gives the maintenance team a specific, data-backed hypothesis to investigate, which can shorten diagnostic time.
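
As a rough illustration of what an Email Tool might do internally, the sketch below assembles the report with Python's standard `email.message.EmailMessage`. The `build_report` helper and the example.com addresses are hypothetical, and actual delivery (for example via `smtplib`) is left out because it depends on your mail infrastructure.

```python
from email.message import EmailMessage

def build_report(event, root_cause, evidence, recommendation, sender, recipient):
    """Assemble the alert email from the event and the analysis results."""
    msg = EmailMessage()
    msg["From"] = sender
    msg["To"] = recipient
    msg["Subject"] = (
        f"High-Priority Alert: {root_cause} Detected on {event['asset_id']}"
    )
    alarm_name = event["alarm_type"].replace("_", " ")
    alarm_time = event["timestamp"][11:19]  # HH:MM:SS portion of the ISO timestamp
    body = [
        f"- Event: {alarm_name} ({event['value']}{event['unit']}) detected on "
        f"{event['asset_id']} at {alarm_time}.",
        f"- AI Analysis: Root cause classified as {root_cause}.",
        f"- Supporting Evidence: {evidence}",
        f"- Recommendation: {recommendation}",
    ]
    msg.set_content("\n".join(body))
    return msg

event = {
    "timestamp": "2024-06-12T14:30:00Z",
    "asset_id": "Pump-101",
    "alarm_type": "High_Vibration_Alarm",
    "value": 1.2,
    "unit": "g",
}

msg = build_report(
    event,
    root_cause="Probable Cavitation",
    evidence="Pressure fluctuated between 40 and 90 PSI before the alarm.",
    recommendation="Inspect pump intake for blockages and check fluid levels.",
    sender="agent@example.com",          # placeholder address
    recipient="maintenance@example.com", # placeholder address
)
print(msg["Subject"])
print(msg.get_content())
```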

Advanced Use Cases for Historian AI Agents

Beyond simple event correlation, Historian AI Agents unlock more sophisticated capabilities:

- Golden Batch Analysis: The agent can query the historian for the parameters of the most successful production runs (the "golden batch"). It can then monitor real-time data and alert operators if current parameters deviate significantly from the optimal profile.

- Energy Consumption Optimization: By correlating historical energy usage data (from smart meters) with production schedules and operational states, the agent can identify opportunities for energy savings, such as staggering the startup of high-draw equipment.

- Compliance and Reporting Automation: The agent can be scheduled to run queries required for environmental or regulatory compliance reporting, automatically generating and distributing the necessary reports without manual intervention.
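
To illustrate the Golden Batch Analysis idea from the list above, here is a minimal sketch that compares current readings with a golden-batch profile and reports parameters that deviate beyond a tolerance. The parameter names, values, and the 5% tolerance are hypothetical; a real profile would typically be time-aligned across the phases of a batch rather than a single set of targets.

```python
def deviations(golden, current, tolerance_pct=5.0):
    """Return {parameter: percent deviation} for readings outside the tolerance."""
    out = {}
    for name, target in golden.items():
        if name not in current or target == 0:
            continue  # skip missing readings and targets with no relative scale
        pct = abs(current[name] - target) / abs(target) * 100
        if pct > tolerance_pct:
            out[name] = round(pct, 1)
    return out

# Hypothetical golden-batch targets and a current snapshot.
golden = {"reactor_temp_c": 180.0, "agitator_rpm": 120.0, "feed_rate_lpm": 40.0}
current = {"reactor_temp_c": 182.0, "agitator_rpm": 105.0, "feed_rate_lpm": 40.5}

print(deviations(golden, current))  # {'agitator_rpm': 12.5}
```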

Conclusion: Activating Your Data Archive

Your data historian is more than a digital filing cabinet; it's a treasure trove of operational wisdom. By deploying a specialized Historian AI Agent, you give that data a voice. You empower your organization to shift from a reactive "what happened?" mindset to a proactive "what's about to happen, and why?" approach.

This transformation doesn't require replacing your existing systems. It involves intelligently layering an AI agent platform like MQTTfy on top of your current infrastructure, creating a powerful synergy between real-time events and deep historical context. The result is a more resilient, efficient, and intelligent industrial operation, driven by data-backed, autonomous decisions.